In today’s world, where social media is a primary source of information, health misinformation has become a serious and widespread problem. Influencers and online platforms often share unverified or misleading health advice, leading to dangerous outcomes like vaccine hesitancy, the promotion of unproven treatments, and a growing distrust in science.

To better understand and address this issue, I spoke to Maren Hunsberger, a skilled science communicator and a scientist who has a remarkable talent for connecting the scientific community with the public. Hunsberger is a medical microbiologist who is also the founder and director of Brilliant B. Productions, a science media production company, and the founder and lead consultant of Scientifically Speaking Communications, a consulting agency specializing in science-centered information. She holds an MSc in Medical Microbiology from the London School of Hygiene & Tropical Medicine and an MSc in Science Communication from Imperial College London. Her work focuses on making complex topics, including health information, easy to understand, relatable, and trustworthy, especially in a landscape flooded with misinformation.

During our conversation, we explored the root causes of health misinformation, its disproportionate effects on vulnerable communities, and the creative strategies science communicators can use to combat it. We also discussed how partnerships with influencers and policymakers can help prioritize public health literacy and build a more informed society. Drawing on Hunsberger’s professional insights, this interview sheds light on the vital role science communication plays in tackling one of the most urgent challenges of our time: the spread of health misinformation in the digital age.

Health misinformation, particularly on social media, has been linked to serious consequences like vaccine hesitancy and distrust in science. In your experience, what do you see as the root causes of this growing issue?

MH: I see two main dimensions to this issue: the human side and the social media side.

On the human side, misinformation often spreads because it taps into real emotional experiences. Most conspiracy theories and health myths are powerful because they contain a seed of truth. In this case, the truth is that many people, especially in the U.S., feel let down by the healthcare system. Their symptoms are dismissed, their conditions are misunderstood, and especially for folks from marginalized communities, the system often fails to meet their needs. That disillusionment is valid, and misinformation preys on it.

We also know from social psychology that personal experience overrides data. If someone has a severe reaction to a vaccine (or knows someone who did), that anecdote will carry more weight than any statistic. We’re storytelling creatures. We connect more deeply to emotion than to peer-reviewed studies, especially in moments of fear or uncertainty. And in those moments, people are desperate for answers and a sense of control. But science and medicine rarely offer simple, definitive answers. That makes people more vulnerable to anything that does offer certainty, even if it’s false. What we need is more transparency around how we know what we know, not just what we know. That kind of trust-building takes time, something many doctors simply don’t have due to systemic issues.

Then there’s the social media side. Algorithms, like our brains, prioritize the extreme and emotionally charged. Posts that say “you’ve been lied to” or offer an instant fix cut through the noise because they’re negative, urgent, and simple. In comparison, nuanced, evidence-based information without easy answers doesn’t stand a chance. So ultimately, it’s a feedback loop. Real emotional pain and systemic failure combine with platform-backed content bias to amplify outrage and deepen the divide. When we look at it through this lens, tackling misinformation isn’t just about correcting facts: it’s about restoring trust, establishing personal connection, and making people feel heard and cared for.

How does the spread of health misinformation disproportionately affect vulnerable populations, and what are some long-term societal risks if this trend continues unchecked?

MH: Health misinformation doesn’t affect everyone equally. Marginalized and underserved communities are often hit hardest, partly because of a long history of exclusion and harm at the hands of the medical system. In the U.S., clinical trials were mostly done on men for decades, and still are today, albeit to a lesser extent. This means that women and others have been left out of the data that informs care, with real implications for things like side effect profiles and dosage estimates. Deeply unethical experiments like the Tuskegee Syphilis Study, which only ended in 1972, have left a legacy of justified distrust. That distrust can be easily exploited, especially when misinformation is presented in ways that feel human and relatable. On top of that, many people still face barriers to accessing accurate health information. It’s not about intelligence or willingness to learn; it’s about the cost of that access, the time it takes, and whether the information is presented in a way that actually works for people’s lives, including their cultures and languages.

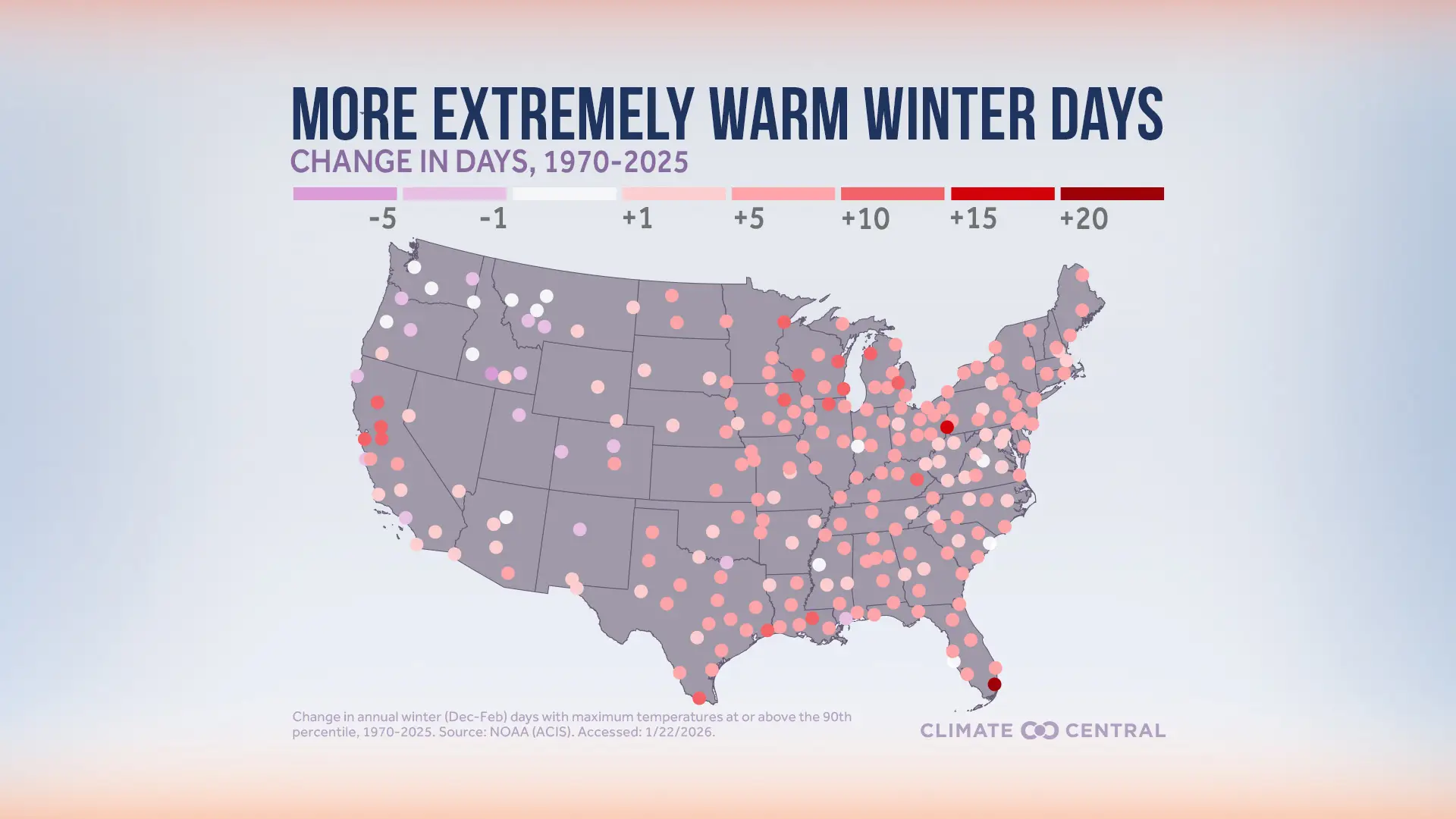

We are already feeling the serious consequences of this. Vaccines are the most effective public health intervention we have. For example, the U.S. was declared to have eliminated measles in 2000 thanks to vaccination coverage. But in 2025, we’ve already seen more than a thousand measles cases, up from under 300 last year, largely due to falling vaccination rates. As preventable diseases like measles return, we’re also likely to see a rise in the chronic health complications that accompany those diseases, especially in communities with less access to care. These outcomes put additional strain on already overstretched health systems and create a vicious cycle: people get sick, feel let down by the system, and their distrust deepens. That makes them even more vulnerable to future misinformation, especially in moments of crisis like a pandemic. Ultimately, unchecked health misinformation doesn’t just threaten individual well-being. It chips away at public trust, widens preexisting health disparities, and weakens our ability to address health concerns at their root.

What strategies have you found most effective in countering health misinformation while maintaining engagement with skeptical audiences? How can science communicators balance accuracy with accessibility?

MH: One of the things that’s worked best for me is de-escalating. Content that “calls people out” or goes straight into debunking mode is very popular on social media because it’s algorithm fodder: it’s extreme, often negative, and appeals to people who already agree with the science, who then share the content and perpetuate its spread. But this approach can make the person who posted the misinformation double down, especially if they already feel defensive. Instead, I try to understand where they’re coming from and find some shared ground. Like, if someone calls me a “pharmaceutical shill” for explaining why buying an unregulated parasite cleanse off Amazon isn’t a good idea, I don’t immediately push back. I might say, “Yeah, I totally get why you’d be skeptical; the medical system is deeply flawed in a lot of ways.” That often catches them off guard in a good way and opens the door to a real conversation. Once people feel heard, they’re usually more willing to engage. People are pretty much never open to new information if they’re feeling attacked, so you have to break down that wall of antagonism to have any chance of changing someone’s mind.

Another helpful tactic is framing science as a process, not an immutable set of absolute truths. People tend to tune out when science is presented as all-knowing or condescending. Instead of saying, “Here’s the truth,” I try to walk people through how we know what we know. Instead of just dropping a stat, I’ll explain how the study was done or why scientists trust a particular method. Opening up the process of science that way helps people feel far more comfortable with the results than just saying, “Do it because the smart people told you to; they know better than you do.” Storytelling and analogies help a lot too. If I’m talking about vaccines, I might compare the immune system to a home security system. It helps people connect the dots without dumbing things down. And if I don’t know something, I just say so. There’s nothing wrong with admitting it and inviting the audience along to find the answer together. That kind of honesty keeps people engaged while building trust, models a process of critical thinking, and shows that science is about curiosity and learning, not just having the “right” answer.

Science communicators often face challenges when trying to collaborate with social media influencers or policymakers. What steps can be taken to bridge these gaps and create meaningful partnerships that prioritize public health literacy?

MH: First, I think we have to accept that influencers—whether we like it or not—play a huge role in shaping how people understand the world around them. Instead of resisting that, it’s more productive to work with it. People feel a real connection to influencers, and that interpersonal trust carries weight. Policymakers, in particular, need to recognize and lean into this reality. Influencers and online communicators can reach audiences that traditional public health messaging simply can’t. To get ahead of misinformation, policymakers should be actively engaged with the social media landscape—not just pushing information from the top down, but being embedded in the ecosystem, aware of trends and conversations as they’re happening. And most importantly, it has to be a two-way street. Policymakers can’t just drop a press release and expect the online communication space to parrot it. They need to listen to what will actually work for each communicator and their audience, because monolithic, blanket messaging doesn’t work anymore. It needs to be nimble, adaptive, and tailored to each specific platform and community.

When it comes to science-specific influencers or science-adjacent voices, the most powerful thing we can do is openly acknowledge the limits of our knowledge. That kind of humility builds trust. When public guidance shifts, it can feel like betrayal if people thought they were being told absolute truths. But if we can do a better job of framing science as something that evolves with new evidence, we invite people into that learning process instead of alienating them. So whether we’re working with influencers, policymakers, or other communicators, the goal should be to build trust through transparency—and to create messaging that helps people feel like they’re part of that process, not on the receiving end of a lecture.

Looking ahead, how can we leverage technology, education, and policy changes to not only mitigate the current wave of health misinformation but also prevent similar crises in the future? What role do you envision for science communicators in shaping this future?

MH: Tackling health misinformation isn’t going to happen with just one solution—it’s going to take a multi-layered approach that brings together technology, education, and policy. On the tech side, social media platforms need to take more responsibility. That might mean stronger content moderation, better visibility for fact-checking tools, and tweaking algorithms so that accurate, trustworthy information doesn’t get buried under sensational stuff. At the same time, we can be using these platforms for good—like creating engaging, easy-to-understand science content that actually reaches people where they are.

Education is a huge part of this too. We need to be teaching media literacy and critical thinking skills early on, so people know how to assess sources and spot bias. Science communicators can help build these skills by creating resources that make those ideas feel relevant and doable. And then there’s policy—governments and institutions need to step up and support science literacy, whether that’s funding science communication programs, encouraging researchers to engage with the public, or pushing for more transparency in science and medicine. Science communicators have a key role to play across all of this—not just by putting out accurate content, but by building trust and keeping dialogue open. If we can work together to make change across these sectors, we have a real chance at pushing back against health misinformation and helping people feel more confident making informed choices about their health.

In your experience, what are some innovative ways science communicators can better engage the public with complex health information? Are there specific formats or platforms you find particularly effective for reaching diverse audiences?

MH: In addition to everything we’ve already touched on—formats, platforms, and storytelling styles—I think one of the most underutilized ways to engage the public with complex health information is to start with genuine curiosity about your audience. Not just curiosity about what they don’t know, but about who they are, what they care about, and why they might feel skeptical or resistant in the first place.

That means not reacting defensively when someone comes at you in the comments with something like, “What are you talking about? You just want everyone to stay sick.” It takes a lot of work to set your ego aside in those moments, but it’s essential to remember: It’s not about you. It’s about them—and about understanding why they feel that way. When you can meet that challenge with empathy and ask questions instead of making statements, you open the door to meaningful dialogue.

This is why I always come back to a Socratic approach. I use it in classrooms, in-person events, and online conversations. Instead of saying, “Here’s the fact,” I’ll ask, “What makes you feel that way?” or “How did you come to that conclusion?” When people feel seen and heard, they’re much more open to new perspectives. And when you’ve gathered more context about where they’re coming from, you can tailor your message so it resonates more deeply. It’s about guiding someone to re-evaluate their own beliefs rather than trying to override them with yours.

This approach is a skill. It’s slow, and it’s hard. It runs counter to our instinct as scientists and communicators to simply deliver knowledge. But asking good questions is just as important as providing good answers. I’ve even started thinking about how studying debate and rhetorical fallacies might sharpen this skill, because when you understand how arguments are constructed, you can ask questions that gently unravel misinformation and prompt self-reflection, rather than provoking defensiveness.

Of course, this works best on platforms that allow for actual dialogue. That’s why I think live-streaming formats—though intimidating—are full of potential. They allow for real-time engagement where the audience isn’t just consuming your content, they’re shaping it with their questions and reactions. Reddit is another great example. It’s been really fascinating to explore recently because it’s a space where knowledge is communally generated. You might be replying to one person, but countless others are watching that exchange—and learning from it too.

Ultimately, we need to lean into formats that allow for slower, messier, more human interactions. That’s where trust is built, and where the real work of science communication happens—not in broadcasting at people, but in creating space for people to explore their own thinking, together.

How can science communicators ensure that health information is not only accurate but also accessible and relatable to people from different cultural, educational, or socioeconomic backgrounds? What role do storytelling and empathy play in this process?

MH: The first step is to leave the jargon at the door. For many scientists, technical language is tied to credibility and status, because it’s the way we’ve been trained to speak and gain respect from our peers. But outside those circles, that same language can alienate people and reinforce power dynamics that already make science feel unapproachable. Communicators need to let go of the fear that plain language makes them sound less intelligent.

As I’ve mentioned throughout this conversation, being willing to say “I don’t know” is equally important. It feels risky, especially for those trained in science, where uncertainty can be seen as failure. But modeling that uncertainty, and walking someone through how you’d find an answer, instantly levels the playing field. That process-based, collaborative approach was central to a series I created for PBS called Fascinating Fails, which emulated the style and format of lifestyle and vlog content to reframe science as something messy, human, and iterative: something you can explore alongside the audience.

Empathy also plays a vital role, but it has limits. A communicator can be deeply empathetic, but they may still lack the lived experience necessary to connect meaningfully with a specific audience. That’s why it’s essential to elevate voices from within the communities you’re trying to reach. For example, when it comes to vaccine misinformation, the most powerful messenger to reach concerned parents may not be a researcher, but another parent: someone who shares the same stakes and fears. Authenticity and relatability come not just from emotion, but from shared context.

Storytelling is the final, and perhaps most powerful, tool. But it has to be audience-first. That means understanding what your audience already watches, what styles resonate with them emotionally, and how to mirror those formats to capture attention. Educational content doesn’t have to follow the standard template of the static, talking-head explainer video. It can be styled like a beauty tutorial, a comedy sketch, or a travel vlog. If the format and structure feel familiar and welcoming, the ideas inside have a better chance of being heard. We need to lean into the formats that are already popular with our target audiences.

Ultimately, accuracy alone isn’t enough. Relatability, humility, and emotional resonance are what turn good science communication into meaningful public engagement.

Abdulmalik Adetola is a master’s student in the Media Innovation and Journalism program at UNR and a graduate assistant for the Hitchcock Project. He is a passionate advocate for health literacy and digital communication, dedicated to promoting accurate and accessible health information in the modern age.